
Samson Aligba

The Morning the Guess Became a Bug

The engineer had every reason to be confident.

She asked an AI assistant to add a new pause option to the subscription service. The generated code compiled. The tests passed. Customers could pause with a click. From the outside, the system looked improved by construction.

Two weeks later, support tickets spiked. Paused subscribers were still being charged. The billing logic treated “paused” exactly like “active,” because the coupling between subscription state and billing state had never been written in a form the model could see. The assistant produced a plausible implementation of an incomplete idea. Incompleteness turned into behavior. Behavior turned into complaints.

Inferred intent is indistinguishable from error — until surprise proves otherwise.

This is not an exotic edge case of autonomous agents. It happens the moment AI becomes a participant in writing code at all. The model is doing what language models do: maximize plausibility within the instructions shown. If purpose and limits live only in heads, the AI builds as though they don’t exist.

The Telephone Accelerates

Development has always been a game of telephone.

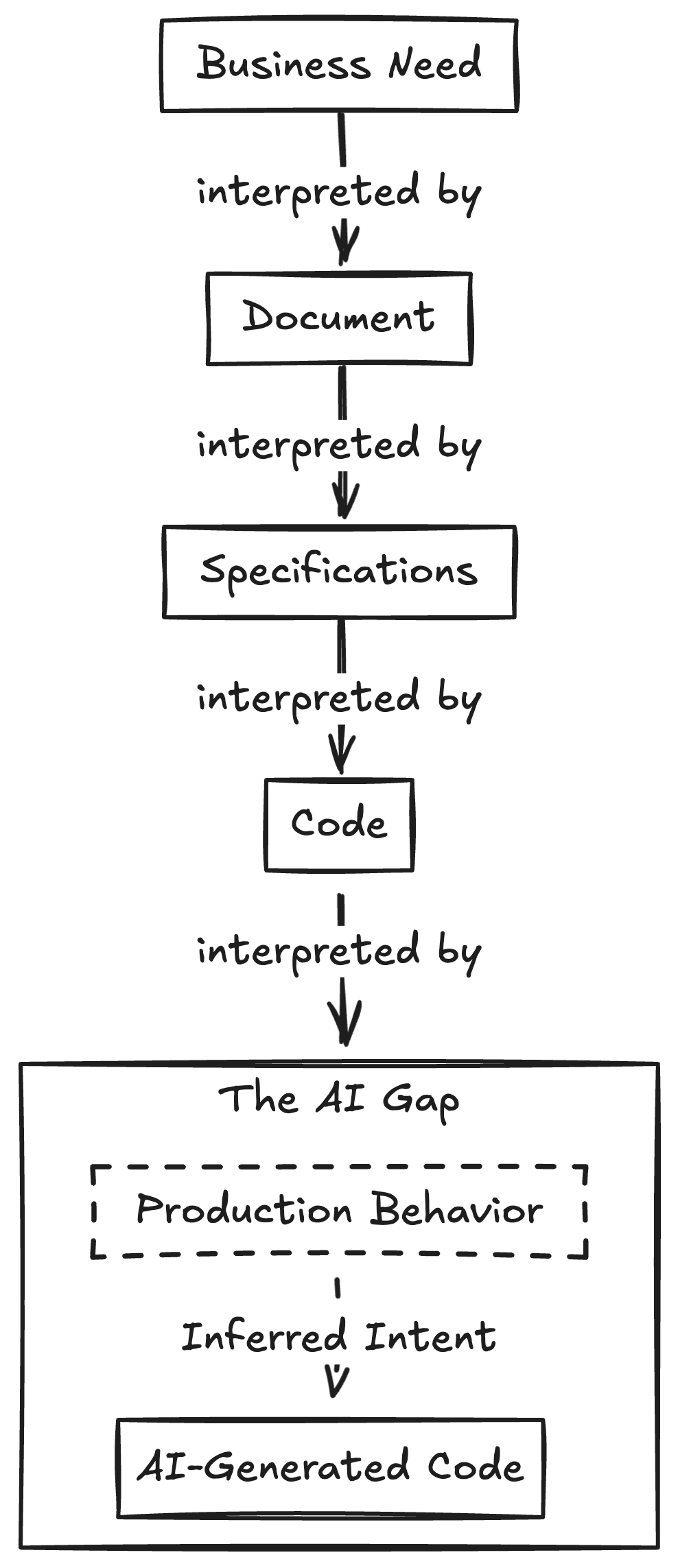

A business need becomes a document, becomes specifications, becomes code, becomes production behavior. Each handoff risks losing fidelity. Each translation can introduce drift.

Business Need → Document → Specifications → Code → Behavior

AI removes the friction without removing the telephone. A feature can be implemented before constraints are articulated. A refactor can be proposed before trade-offs are discussed. The assistant fills gaps confidently, because confidence is cheap even when understanding is not.

What used to be noticed during slow reviews now slips through at machine speed:

unstated assumptions, invisible couplings, safeguards that were load-bearing but never named as such.

Problems surface only after users complain or when an auditor asks what the system was authorized to do.

Memory Is Not Permission

The industry response has focused on recall.

Retrieval systems ingest files. Agents maintain session memory. Platforms track trajectories and personalization. This is good engineering; it helps models remember what happened.

But continuity is not authority.

Remembering that a refund was processed doesn’t tell you it was permitted.

Remembering a previous implementation doesn’t mean it respected sacred constraints.

Remembering a user request doesn’t specify which requests the system may act on.

Memory expands the space of what AI knows. It does nothing to restrict the space of what it is allowed to produce.

Without a durable boundary to compare against, the model’s guess about purpose becomes indistinguishable from actual purpose.

The Missing Brakes

It’s tempting to label this an “agentic AI” problem, something that only matters once you deploy autonomous systems running for hours. That framing lets most teams delay: “we’re not there yet.”

But the brake goes missing much earlier.

The developer who asks the assistant to “optimize this service” and receives code that removes an undocumented rate limit.

The team that prototypes a feature rapidly and introduces a state machine that contradicts an implicit business rule.

The senior engineer who trusts the assistant’s confident handling of an edge case, not realizing the assistant invented the edge case itself.

Agents don’t create the problem. Speed reveals it.

And as agents participate more deeply, the tolerance for implicit understanding drops to zero.

Tests are the ultimate brakes, with one caveat: a suite cannot fail what it never knew to test.

Tests = the operational floor.

Authority artifact = the semantic ceiling.

And when floor and ceiling disagree, the gap is paid in surprise.
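The caveat can be made concrete with a small sketch. Everything here is hypothetical and written only for illustration: the `Subscription` class, the `pause` and `should_charge` functions, and the guess that the billing rule was "charge anything not cancelled." The first check is the kind a suite would plausibly contain; the second is the one nobody knew to write.

```python
from dataclasses import dataclass


@dataclass
class Subscription:
    state: str = "active"


def pause(sub: Subscription) -> None:
    """The new feature: pausing flips the subscription state."""
    sub.state = "paused"


def should_charge(sub: Subscription) -> bool:
    # Assumed bug, mirroring the story: anything that is not cancelled
    # gets billed, so "paused" is treated exactly like "active".
    return sub.state != "cancelled"


def check_pause_sets_state() -> None:
    """The test that existed: it passes, and the feature ships."""
    sub = Subscription()
    pause(sub)
    assert sub.state == "paused"


def check_paused_is_not_charged() -> None:
    """The test nobody knew to write: it fails against the code above."""
    sub = Subscription()
    pause(sub)
    assert not should_charge(sub)
```

Calling `check_pause_sets_state()` succeeds, while `check_paused_is_not_charged()` raises `AssertionError`. The suite was green only because the second check was never in it.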

A Place for “Meaning” to Live

The counter-pattern is intentionally simple:

Put what must hold in a document that lives outside any single conversation.

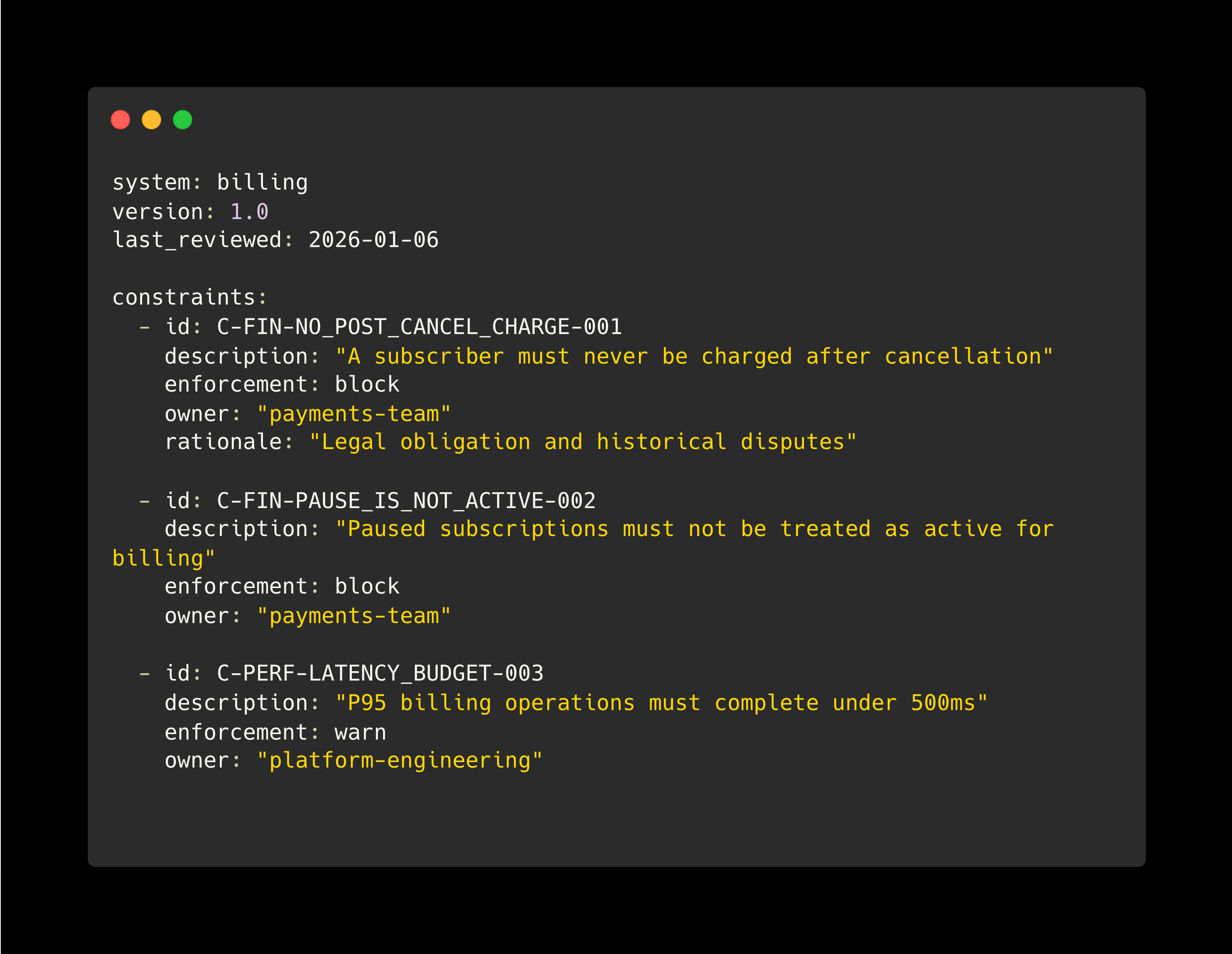

This resembles a configuration, but a configuration with semantic consequences. The difference is enforcement.

An illustration of this gap

A minimal billing artifact might specify:
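As a sketch only, such an artifact could be expressed as plain data that serializes to JSON, so that both assistants and merge checks can read it. The field names, the owner role, the statement wording, and the second constraint ID are assumptions made for illustration; only the identifier `C-FIN-NO_POST_CANCEL_CHARGE-001` comes from this article.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Constraint:
    id: str
    statement: str              # what must hold, in plain language
    owner_role: str             # a role, not a person
    non_billable_states: tuple  # states in which charging is forbidden
    on_violation: str           # "BLOCK" means deployment halts


BILLING_ARTIFACT = (
    Constraint(
        id="C-FIN-NO_POST_CANCEL_CHARGE-001",
        statement="A cancelled subscription must never be charged.",  # assumed wording
        owner_role="billing-owner",                                   # hypothetical role
        non_billable_states=("cancelled",),
        on_violation="BLOCK",
    ),
    Constraint(
        id="C-FIN-NO_CHARGE_WHILE_PAUSED-002",                        # hypothetical ID
        statement="A paused subscription must never be charged.",     # assumed wording
        owner_role="billing-owner",
        non_billable_states=("paused",),
        on_violation="BLOCK",
    ),
)


def to_json(artifact) -> str:
    """Serialize the artifact so it can live outside any single conversation."""
    return json.dumps([asdict(c) for c in artifact], indent=2)
```

Printing `to_json(BILLING_ARTIFACT)` yields a file-ready document. The format matters less than the fact that the coupling between subscription state and billing state is now written down where a model can see it.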

The artifact is not magic. Discovery still requires observation and conversation. But once written, the boundary survives the telephone: it can be read by assistants, checked before merge, and create accountability when violated.

Enforcement Without Bureaucracy

The honest objection is familiar to any leader:

another policy document that rarely gets used.

The answer is scoped consequence rather than ceremonial maximalism.

One rule can matter.

The constraint C-FIN-NO_POST_CANCEL_CHARGE-001 does not require a fifty-page framework to be useful. It requires only:

a clear statement,

a named owner role,

a mechanism that checks it.

If the AI proposes billing code that couples “paused” to “active,” the check flags a BLOCK. Deployment halts until a human adjudicates: fix the code, update the boundary deliberately, or create a time-boxed exception with expiry.
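One way such a check might look, as a minimal sketch: it assumes the proposed change declares which subscription states are billable, and it compares that declaration against the constraints. The `Waiver` type, the `check` and `may_deploy` functions, the `WAIVED` verdict, and the second constraint ID are hypothetical names introduced here, not part of any existing tool.

```python
from dataclasses import dataclass
from datetime import date

# Constraint ID -> the state in which charging is forbidden.
CONSTRAINTS = {
    "C-FIN-NO_POST_CANCEL_CHARGE-001": "cancelled",
    "C-FIN-NO_CHARGE_WHILE_PAUSED-002": "paused",  # hypothetical ID
}


@dataclass(frozen=True)
class Waiver:
    """A time-boxed exception approved by the constraint's owner role."""
    constraint_id: str
    approved_by_role: str
    expires: date


def check(billable_by_state: dict, today: date, waivers=()) -> dict:
    """Return a verdict per constraint: "PASS", "WAIVED", or "BLOCK"."""
    verdicts = {}
    for cid, state in CONSTRAINTS.items():
        if not billable_by_state.get(state, False):
            verdicts[cid] = "PASS"
        elif any(w.constraint_id == cid and today <= w.expires for w in waivers):
            verdicts[cid] = "WAIVED"  # allowed only until the waiver expires
        else:
            verdicts[cid] = "BLOCK"
    return verdicts


def may_deploy(verdicts: dict) -> bool:
    """Deployment proceeds only if no constraint is blocking."""
    return "BLOCK" not in verdicts.values()
```

For a proposal such as `{"active": True, "paused": True, "cancelled": False}`, the paused constraint returns `BLOCK` and `may_deploy` is false. Passing an unexpired `Waiver` for that constraint changes the verdict to `WAIVED`, and once the expiry date has passed the block returns on its own.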

Governance becomes operational when it can stop one dangerous action while everything else proceeds normally. The organization enumerates only the constraints where violation is unacceptable, not every imaginable preference.

That is a release valve teams can actually maintain.

Context Expands. Authority Restricts.

Two forces shape AI participation:

Context graphs widen the possible.

Semantic authority narrows the permissible.

True effectiveness requires both. Expanding context alone creates capable assistants that confidently build the wrong thing. Restraining output alone creates assistants that lack the understanding to help meaningfully.

The balance must be explicit: which constraints are sacred, which are strong preferences, which are observed for learning.
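Making that balance explicit could be as small as a lookup from tier to consequence. The sketch below is an assumption throughout: the tier names follow the sentence above, but the `WARN` and `LOG` actions and the `gate` function are illustrative choices; only `BLOCK` appears elsewhere in this article.

```python
from enum import Enum


class Tier(Enum):
    SACRED = "sacred"                  # violation is unacceptable
    PREFERENCE = "strong_preference"   # violation needs a visible reason
    OBSERVED = "observed"              # recorded for learning only


# Assumed mapping from tier to consequence.
ACTION_BY_TIER = {
    Tier.SACRED: "BLOCK",
    Tier.PREFERENCE: "WARN",
    Tier.OBSERVED: "LOG",
}


def gate(violated_tiers) -> str:
    """Return the strictest action triggered by the violated tiers."""
    actions = {ACTION_BY_TIER[t] for t in violated_tiers}
    for action in ("BLOCK", "WARN", "LOG"):  # strictest first
        if action in actions:
            return action
    return "PASS"
```

With this mapping, `gate([Tier.OBSERVED, Tier.PREFERENCE])` returns `"WARN"`, and adding `Tier.SACRED` to the list returns `"BLOCK"`. Only the sacred tier can stop a deployment, which keeps the list of hard stops short enough to maintain.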

The Deeper Shift

Execution is becoming cheap.

Understanding remains expensive.

The gap between them is where surprises hide.

You will not notice this in sprint velocity charts. You will notice it in the shock — the feature that broke an assumption, the refactor that violated an invariant, the optimization that quietly removed a safeguard.

The choice is available now:

Let AI infer meaning from gaps,

or formalize what must hold before the first surprise that matters.

Guardrails begin not with prompts, but with understanding written in a form machines can read.